Rendering Blender scenes in the cloud with AWS Lambda

JR Beaudoin

AWS Lambda makes it fairly easy to render scenes with Blender. Lambda functions make sense when you need to render a large number of assets in a short time and each asset is simple enough to be loaded and rendered within the maximum lifetime of a Lambda invocation (15 minutes), given the limited resources available (at most 6 vCPUs and 10 GB of RAM at the time of writing). For more complex assets, EC2 instances or AWS Thinkbox Deadline are a better fit.

When I came up with the idea of running Blender on Lambda, I naturally checked whether someone had already done it and found that @awsgeek had built something similar in 2019, so at least I knew it was possible. The approach presented in this article differs slightly from his, though.

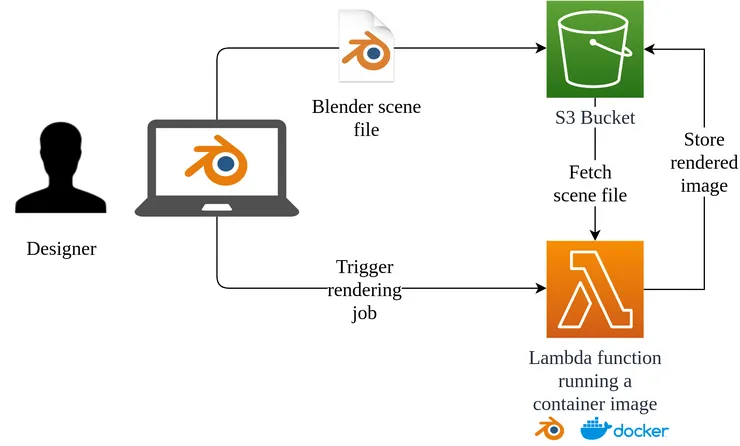

The architecture

Here is the very simple architecture that lets you use AWS Lambda to render Blender scenes stored in an S3 bucket. The final renders are stored in the same bucket.
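To make the input side of this architecture concrete, here is a minimal sketch of a helper that uploads a local scene to the bucket. The function name `upload_scene` and the choice to pass the S3 client in as a parameter are assumptions made for illustration; the default key `scenes/scene.blend` is the one the render script in this article downloads.

```python
from pathlib import Path


def upload_scene(s3_client, bucket: str, local_path: str,
                 key: str = "scenes/scene.blend") -> str:
    """Upload a .blend file to the bucket and return the S3 key that was used."""
    path = Path(local_path)
    if path.suffix != ".blend":
        raise ValueError(f"expected a .blend file, got {path.name}")
    # upload_file(Filename, Bucket, Key) is the boto3 S3 client call
    s3_client.upload_file(str(path), bucket, key)
    return key


# Usage (assumes boto3 is installed and AWS credentials are configured;
# the bucket and file names are placeholders):
#   import boto3
#   upload_scene(boto3.client("s3"), "blender-on-lambda-bucket", "scene.blend")
```

Passing the client in keeps the helper easy to exercise with a stub before pointing it at a real bucket.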

The files you will need to run this “at home” (in the cloud)

To run this on your own AWS account, you will need four files:

- Dockerfile describes the container image that each Lambda function execution is going to run

- serverless.yml describes the infrastructure above

- app.py handles the event from the Lambda infrastructure and starts up Blender

- script.py manipulates Blender from the inside to load a scene and render it

File structure

Here is how these files should be arranged:

project directory
+-- Dockerfile
+-- serverless.yml
+-- app
|   +-- app.py
|   +-- script.py

Dockerfile

For the Dockerfile, I started from a great set of Docker images for Blender maintained by the R&D team of the New York Times. We’re using the latest image that runs Blender rendering with CPU only (as Lambdas don’t have GPU capabilities unfortunately).

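FROM nytimes/blender:2.93-cpu-ubuntu18.04

ARG FUNCTION_DIR="/home/app/"
RUN mkdir -p ${FUNCTION_DIR}
COPY app/* ${FUNCTION_DIR}

RUN pip install boto3
# Install the AWS Lambda Runtime Interface Client alongside the function code
RUN pip install awslambdaric --target ${FUNCTION_DIR}

WORKDIR ${FUNCTION_DIR}
# Start the Runtime Interface Client with the Python interpreter bundled with Blender
ENTRYPOINT [ "/bin/2.93/python/bin/python3.9", "-m", "awslambdaric" ]
CMD [ "app.handler" ]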

Serverless configuration file

The serverless.yml file is fairly simple:

service: blender-on-lambda

frameworkVersion: '2'
configValidationMode: error

custom:
  destinationBucket: blender-on-lambda-bucket

provider:
  name: aws
  region: us-east-1
  memorySize: 10240
  timeout: 900
  ecr:
    images:
      blender-container-image:
        path: ./
  iamRoleStatements:
    - Effect: "Allow"
      Action:
        - "s3:PutObject"
        - "s3:GetObject"
      Resource:
        Fn::Join:
          - ""
          - - "arn:aws:s3:::"
            - "${self:custom.destinationBucket}"
            - "/*"

functions:
  render:
    image:
      name: blender-container-image
    maximumRetryAttempts: 0

resources:
  Resources:
    S3BucketOutputs:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: ${self:custom.destinationBucket}

In this example, I am using the largest available Lambda configuration, with 10 GB of RAM and 6 vCPUs, so that the rendering goes as fast as possible.

The Python Lambda handler

The app.py file contains the handler that the Lambda infrastructure invokes. It receives the triggering event as its first argument.

In this case, the event must contain the width and height of the image to be rendered.

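import json
import subprocess

def handler(event, context):
    # Cast to int so that only numeric values reach the command line
    width = int(event["width"])
    height = int(event["height"])
    # -b runs Blender headless, -P runs script.py; arguments after "--" go to the script
    subprocess.run(
        ["blender", "-b", "-P", "script.py", "--", str(width), str(height)],
        check=True,
    )
    return {
        "statusCode": 200,
        "body": json.dumps({"message": "ok"})
    }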
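To illustrate how a render could be triggered, here is a minimal sketch that builds the event described above and invokes the function asynchronously through a boto3 Lambda client. The helper names `build_event` and `invoke_render` are hypothetical, and the function name in the usage comment assumes the Serverless Framework's default `service-stage-function` naming with the default `dev` stage.

```python
import json


def build_event(width: int, height: int) -> dict:
    """Build the event the handler expects: the render resolution in pixels."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return {"width": width, "height": height}


def invoke_render(lambda_client, function_name: str, width: int, height: int) -> int:
    """Trigger one render asynchronously and return the status code of the call."""
    response = lambda_client.invoke(
        FunctionName=function_name,
        InvocationType="Event",  # asynchronous: do not wait for the render to finish
        Payload=json.dumps(build_event(width, height)).encode("utf-8"),
    )
    return response["StatusCode"]


# Usage (assumes boto3 is installed and AWS credentials are configured;
# the function name is an assumption, check the output of your deployment):
#   import boto3
#   client = boto3.client("lambda", region_name="us-east-1")
#   for width, height in [(1280, 720), (1920, 1080)]:
#       invoke_render(client, "blender-on-lambda-dev-render", width, height)
```

Asynchronous invocation suits the "many assets in little time" use case, since each call returns immediately and the renders run in parallel.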

The Python Blender script

And finally, here is the Python script that will be executed from inside Blender to do the actual rendering.

from datetime import datetime
import sys

import boto3
import bpy

# Blender passes everything after "--" to the script untouched: here, width and height
argv = sys.argv
argv = argv[argv.index("--") + 1:]

s3 = boto3.resource("s3")
# Must match custom.destinationBucket in serverless.yml, the bucket covered by the IAM policy
BUCKET_NAME = "blender-on-lambda-bucket"
filename = f"{datetime.now().strftime('%Y_%m_%d-%I:%M:%S_%p')}.png"

# /tmp is the only writable location in the Lambda execution environment
s3.Bucket(BUCKET_NAME).download_file("scenes/scene.blend", "/tmp/scene.blend")
bpy.ops.wm.open_mainfile(filepath="/tmp/scene.blend", load_ui=False)

bpy.context.scene.render.filepath = f"/tmp/{filename}"
bpy.context.scene.render.resolution_x = int(argv[0])
bpy.context.scene.render.resolution_y = int(argv[1])
bpy.ops.render.render(write_still=True)

s3.Bucket(BUCKET_NAME).upload_file(f"/tmp/{filename}", f"renders/{filename}")
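The argument handling in the script relies on Blender leaving everything after `--` for the script to read from `sys.argv`. As a sketch of how that parsing could be made more defensive, the helpers below isolate it in plain Python so it can be tried outside Blender. The names `script_args` and `parse_resolution` are hypothetical and not part of the original script.

```python
def script_args(argv: list) -> list:
    """Return only the arguments that follow "--", or an empty list if it is absent."""
    if "--" not in argv:
        return []
    return argv[argv.index("--") + 1:]


def parse_resolution(argv: list) -> tuple:
    """Read width and height from the script arguments and validate them."""
    args = script_args(argv)
    if len(args) < 2:
        raise ValueError("expected: -- <width> <height>")
    width, height = int(args[0]), int(args[1])
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive integers")
    return width, height


# The command line built by the handler looks like this from the script's side
example_argv = ["blender", "-b", "-P", "script.py", "--", "1920", "1080"]
print(parse_resolution(example_argv))  # prints (1920, 1080)
```

Failing early with a clear message is easier to debug in the Lambda logs than a render that fails on an invalid resolution.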

Cost

In the us-east-1 region of AWS, the largest available Lambda function costs about $0.01 per minute of execution. The request charge (the amount charged for every invocation) is negligible in comparison. Also, bear in mind that Lambda's generous free tier might cover your costs! The same goes for S3.
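The per-minute figure can be checked with a little arithmetic. The sketch below assumes the x86 on-demand prices for us-east-1 of $0.0000166667 per GB-second and $0.20 per million requests; these are assumptions that may have changed, so check the current Lambda pricing page. The free tier is ignored.

```python
# Assumed us-east-1 x86 on-demand prices; verify against current AWS pricing
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_REQUEST = 0.20 / 1_000_000


def render_cost(duration_seconds: float, memory_gb: float = 10.0) -> float:
    """Estimated cost in USD of one invocation, ignoring the free tier."""
    compute = duration_seconds * memory_gb * PRICE_PER_GB_SECOND
    return compute + PRICE_PER_REQUEST


# One minute on the largest (10 GB) configuration
print(f"{render_cost(60):.4f}")  # prints 0.0100
```

Under these assumptions, a thousand one-minute renders would come to roughly $10, of which the request charges account for a fraction of a cent.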

I hope this can help anyone facing the same need that I did.

If you’re interested in offloading your Blender render jobs to the cloud, follow me on Twitter. I’ll be posting more content in the coming weeks such as:

- cost comparison with using other cloud providers

- the cost impact of using GPU-enabled EC2 instances instead of Lambda

- leveraging AWS EFS instead of S3 to load the scene into Blender

If there are other topics you’d be interested in, let me know on Twitter! My DMs are open.