Mastering OpenAI's GPT Model Function Calling for E-commerce

Timothée Rouquette · 8 min read

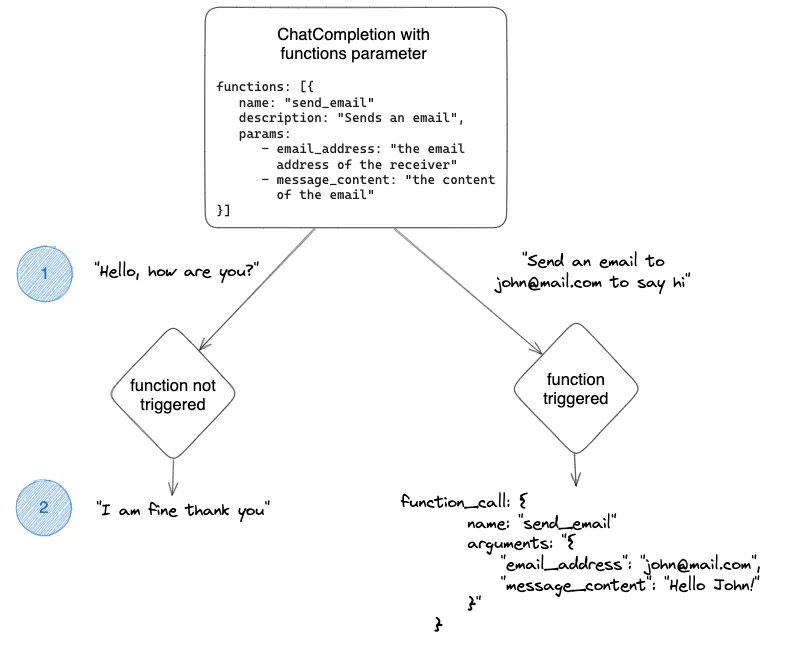

Function calling allows your conversational agent to connect to external tools. The key lies in correctly describing the functions to the GPT model, which can be a bit tricky.

As a software engineer at Theodo, I have been working with the OpenAI API for quite some time now, learning how to write prompts to build virtual assistants for various purposes, with a particular emphasis on e-commerce applications. Recently, I have been experimenting with GPT function calling.

If you have been using the Chat Completion endpoint, you may have experimented with the optional functions parameter. You may have tried to define your functions as precisely as possible, but found that they are not triggering or may even be competing with each other. Perhaps the properties you describe do not match the results you are expecting.

In this article, we will explore methods to enhance the functions parameter. I will also share some tips that I have learned from my personal experience.


Function calling overview 🧠

If you are already familiar with function calling, you may skip this part. However, if you have no idea how it works, you may want to read a quick explanation.

The traditional way to use the Chat Completion endpoint is to define a list of messages representing a conversation between a user and an assistant. The API simply sends back an answer that completes the conversation.

Now, the model can also detect the user’s intention to trigger a function. All we need to do is describe these functions to the model. Once they are defined, the GPT model can generate a JSON object containing the arguments needed to call them.

Ex: We define a “send_email” function

Here is how OpenAI describes the mechanism in the API guide:

These models are fine-tuned to detect when a function needs to be called based on user input and respond with JSON that conforms to the function signature. Function calling enables developers to more reliably obtain structured data from the model.
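As a rough illustration of the “send_email” example, the sketch below shows what such a definition and the model’s reply could look like. The parameter names (`to`, `subject`, `body`), the helper `readFunctionCall` and the sample reply are assumptions made for illustration, not output captured from the API. The point is that `arguments` arrives as a JSON string that you have to parse yourself.

```javascript
// Hypothetical definition of a "send_email" function, in the format
// expected by the `functions` parameter.
const sendEmailDefinition = {
  name: 'send_email',
  description: 'Sends an email to a recipient',
  parameters: {
    type: 'object',
    properties: {
      to: { type: 'string', description: 'email address of the recipient' },
      subject: { type: 'string', description: 'subject of the email' },
      body: { type: 'string', description: 'content of the email' },
    },
    required: ['to', 'subject', 'body'],
  },
};

// Illustrative assistant message (made up, not a real API response).
// When the model decides to call a function, `function_call.arguments`
// is a JSON-encoded string rather than an object.
const sampleMessage = {
  role: 'assistant',
  content: null,
  function_call: {
    name: 'send_email',
    arguments: '{"to": "jane@example.com", "subject": "Hello", "body": "See you soon"}',
  },
};

// Returns { name, args } if the model asked for a function call, otherwise null.
function readFunctionCall(message) {
  if (!message.function_call) return null;
  return {
    name: message.function_call.name,
    args: JSON.parse(message.function_call.arguments),
  };
}

const call = readFunctionCall(sampleMessage);
console.log(call.name, call.args.to);
```

A plain text answer has no `function_call` field, so `readFunctionCall` returns null and the message content can be shown to the user directly.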

Let’s write some functions 🧑💻

For the purpose of this article, we will focus on developing an assistant that can help with customer service requests.

Assume that we have the following functions at our disposal to trigger actions on our e-commerce website:

- returnOrder({ order_number }): creates a new return on the website.
- shopOpeningHours({ nameOfTheShop }): returns the opening hours of a specific shop.
- orderStatus({ order_number }): returns the current status of the order.

These three functions serve simple, yet specific purposes in a classic hypothetical e-commerce website.
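For the rest of the article, it may help to picture these functions as plain JavaScript handlers kept in a lookup table, so that a function name returned by the model can be mapped to real code. The names `handlers` and `dispatch` are hypothetical, and the bodies are placeholder stubs with made-up return values, not a real implementation.

```javascript
// Placeholder implementations: a real site would call its order and shop services.
const handlers = {
  returnOrder: ({ order_number }) => ({ created: true, order_number }),
  shopOpeningHours: ({ nameOfTheShop }) => ({ nameOfTheShop, hours: '9:00-18:00' }),
  orderStatus: ({ order_number }) => ({ order_number, status: 'shipped' }),
};

// Looks up a handler by name and calls it. Unknown names are rejected
// instead of being executed blindly.
function dispatch(name, args) {
  if (!Object.prototype.hasOwnProperty.call(handlers, name)) {
    throw new Error(`Unknown function: ${name}`);
  }
  return handlers[name](args);
}

console.log(dispatch('orderStatus', { order_number: 'OC-42424242' }));
```

Keeping an explicit table means the model can only ever trigger the functions you chose to expose.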

To start creating your assistant, we will begin with the function called returnOrder. The call will include a brief system context and a basic user message:

    openai.createChatCompletion({
      model: 'gpt-3.5-turbo',
      // Conversation so far: a short system context and the user request
      messages: [
        {
          role: 'system',
          content: 'You are a customer support assistant for an ecommerce website'
        },
        {
          role: 'user',
          content: 'I want to make a return for my order'
        },
      ],
      // Functions the model is allowed to call
      functions: [
        {
          name: 'returnOrder',
          description: 'Creates an order return',
          parameters: {
            type: 'object',
            properties: {
              orderCode: {
                type: 'string',
                description: 'the code of the order',
              },
            },
          },
        },
      ]
    })

That is it! We now have a fully automated user support AI agent capable of returning orders. Sounds great, right? This is what I believed when I first tried it… Below, we will examine some issues I encountered and suggest improvements to address them.

1. Use required parameters

When executing our initial version of the code, we receive the following response:

function_call: { name: 'returnOrder', arguments: '{ "orderCode": "12345"}' }

Although the user didn’t mention the code of their order, GPT called the function with a made-up code.

In the parameters object, we can define a required field: a list of the properties that must be provided for the function to be called. We add ‘orderCode’ to that list.

    parameters: {
      type: 'object',
      properties: {
        orderCode: {
          type: 'string',
          description: 'the code of the order',
        },
      },
      required: ['orderCode'],
    }

When executing the new code, the function is not called because the user did not provide the orderCode. The response message is: “Sure, I can help you with that. Can you please provide me with the order code for the return?”.

You may now test the new code with different user requests that may or may not include the order code like the following: ‘I want to make a return for my order, the code is OC-42424242’. With the provided orderCode, the function will work correctly:

function_call: { name: 'returnOrder', arguments: '{"orderCode": "OC-42424242"}' }
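The `required` list guides the model, but I would not assume that its output always respects the schema, so it seems prudent to re-check the arguments in your own code before triggering a real return. The sketch below is one possible guard; `parseArguments` and `missingRequired` are hypothetical helpers, not part of the OpenAI library.

```javascript
// Schema of the returnOrder parameters, as defined above.
const returnOrderParameters = {
  type: 'object',
  properties: {
    orderCode: { type: 'string', description: 'the code of the order' },
  },
  required: ['orderCode'],
};

// Parses the JSON string produced by the model.
// Returns null if it is not valid JSON or not a JSON object.
function parseArguments(rawArguments) {
  try {
    const parsed = JSON.parse(rawArguments);
    return parsed !== null && typeof parsed === 'object' ? parsed : null;
  } catch (error) {
    return null;
  }
}

// Returns the names of required properties that are missing or empty in `args`.
function missingRequired(parameters, args) {
  return (parameters.required || []).filter(
    (key) => args[key] === undefined || args[key] === null || args[key] === ''
  );
}

const args = parseArguments('{"orderCode": "OC-42424242"}');
console.log(missingRequired(returnOrderParameters, args)); // nothing missing
console.log(missingRequired(returnOrderParameters, {}));   // orderCode is missing
```

If the list of missing fields is not empty, the assistant can ask the user for them instead of calling the function.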


It works with follow-up discussions

If the user mentions the order code later in the discussion, we will still achieve the same result.

    { role: 'system', content: 'You are a customer support assistant for an ecommerce website' },
    { role: 'user', content: 'I want to make a return for my order' },
    { role: 'assistant', content: 'Sure, I can help you with that. Can you please provide me with the order code for the return?' },
    { role: 'user', content: 'OC-42424242' }

2. Use examples

Now, let’s imagine that our order codes follow a specific format: ‘OC-xxxxxxxx’, where x represents numbers. If the user only mentions the number, we won’t be able to call the returnOrder function with the correct code. For example: ‘I want to make a return for my order, the code is 55667788’.

function_call: { name: 'returnOrder', arguments: '{"orderCode": "55667788"}' }

Here the answer is not quite what we wanted: we need the arguments object to give us the orderCode in a specific format. We added the requirement that ‘it should start with OC-’ to the description. However, I noticed that the model would give us the correct format only about half the time. This can be mitigated with examples.

Our parameters will look like this:

    parameters: {
      type: 'object',
      properties: {
        orderCode: {
          type: 'string',
          description: 'the code of the order, it should start with OC- e.g. OC-42424242, OC-42424243, ...',
        },
      },
      required: ['orderCode'],
    }

Here is the answer:

function_call: { name: 'returnOrder', arguments: '{"orderCode": "OC-55667788"}' }
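Since examples reduce badly formatted codes but may not eliminate them, a small server-side check can act as a safety net. The sketch below assumes the format is exactly `OC-` followed by eight digits, as in the examples above; `normalizeOrderCode` is a hypothetical helper, not part of the OpenAI API.

```javascript
// Assumed format: "OC-" followed by exactly eight digits.
const ORDER_CODE_PATTERN = /^OC-\d{8}$/;

// Returns a well-formed order code, or null if the input cannot be repaired.
function normalizeOrderCode(rawCode) {
  const trimmed = String(rawCode).trim().toUpperCase();
  if (ORDER_CODE_PATTERN.test(trimmed)) return trimmed;
  // The model sometimes forwards only the digits: add the missing prefix.
  if (/^\d{8}$/.test(trimmed)) return `OC-${trimmed}`;
  return null;
}

console.log(normalizeOrderCode('OC-55667788')); // OC-55667788
console.log(normalizeOrderCode('55667788'));    // OC-55667788
console.log(normalizeOrderCode('hello'));       // null
```

When the helper returns null, it is probably safer to ask the user for the code again than to guess.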

3. Use enums

Let’s now focus on the shopOpeningHours function. Here is how we first define it:

    {
      name: 'shopOpeningHours',
      description: 'Returns the opening hours of a shop',
      parameters: {
        type: 'object',
        properties: {
          shopName: {
            type: 'string',
            description: 'the name of the shop',
          },
        },
        required: ['shopName'],
      },
    }

Our firm has three shops: Honolulu, Belharra and Peniche. We have already marked the shop name as required, so the function is triggered only when the user mentions a shop name.

We test the following prompt: ‘What are the opening hours of the bellara shop?’

function_call: { name: 'shopOpeningHours', arguments: '{"shopName": "bellara"}' }

Great! We called the function! However, we didn’t receive the opening hours of the shop… The user misspelled the shop name, which is why we couldn’t retrieve the information.

To address this issue, we are going to add an enum list to the shopName property as follows:

    parameters: {
      type: 'object',
      properties: {
        shopName: {
          type: 'string',
          description: 'the name of the shop',
          enum: ['Honolulu', 'Belharra', 'Peniche'],
        },
      },
      required: ['shopName'],
    },

The correct shop name has been detected: the misspelling has been fixed and the capitalization corrected.

function_call: { name: 'shopOpeningHours', arguments: '{"shopName": "Belharra"}'}
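I would not assume the model always stays within the enum, so it can be worth keeping the shop list in one place and reusing it both in the definition and in a server-side check. A minimal sketch, where `SHOP_NAMES` and `resolveShopName` are hypothetical names:

```javascript
// Single source of truth for the shop names.
const SHOP_NAMES = ['Honolulu', 'Belharra', 'Peniche'];

// The definition reuses the list, so the enum cannot drift from the check below.
const shopOpeningHoursDefinition = {
  name: 'shopOpeningHours',
  description: 'Returns the opening hours of a shop',
  parameters: {
    type: 'object',
    properties: {
      shopName: {
        type: 'string',
        description: 'the name of the shop',
        enum: SHOP_NAMES,
      },
    },
    required: ['shopName'],
  },
};

// Returns the canonical shop name (case-insensitive match), or null if unknown.
function resolveShopName(candidate) {
  const wanted = String(candidate).trim().toLowerCase();
  return SHOP_NAMES.find((name) => name.toLowerCase() === wanted) || null;
}

console.log(resolveShopName('belharra')); // Belharra
console.log(resolveShopName('bellara'));  // null
```

The check only handles capitalization and whitespace. Fixing misspellings such as ‘bellara’ is left to the model, which is what the enum is for.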

4. Limit the number of functions

One important thing to understand is that when you define more than one function, they may compete with each other, especially if their definitions are similar. This can result in the wrong function being called, so keep in mind what differentiates them.

For example, take the following two functions:

    const orderLocation = {
      name: 'orderLocation',
      description: 'Returns the location of an order',
      parameters: {
        ...
      }
    }

    const orderStatus = {
      name: 'orderStatus',
      description: 'Returns the status of an order',
      parameters: {
        ...
      }
    }

We attempted to inquire about the status of the order by asking, “Was my order shipped?” The model responded with ‘orderLocation’ on the first occasion and ‘orderStatus’ on the second occasion.

In this case, I recommend keeping only the orderStatus function. Adapt it in your code so that the information about the location can be passed to the next call, making the answer even more accurate.

You can learn more about conversation tracking in this OpenAI cookbook.
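As a sketch of that recommendation, a single `orderStatus` handler can return both the status and the location, and its result can be appended to the conversation as a message with the `function` role for the next call. The order data, the field names (`status`, `location`) and the helper `toFunctionMessage` are made up for illustration.

```javascript
// Made-up order data standing in for a real order service.
const ORDERS = {
  'OC-42424242': { status: 'shipped', location: 'Bordeaux sorting center' },
};

// Single handler covering both "what is the status?" and "where is my order?".
function orderStatus({ orderCode }) {
  const order = ORDERS[orderCode];
  if (!order) return { error: `Unknown order ${orderCode}` };
  return { orderCode, status: order.status, location: order.location };
}

// Wraps a function result as a message with the `function` role, so that it
// can be appended to `messages` and sent with the next request.
function toFunctionMessage(name, result) {
  return { role: 'function', name, content: JSON.stringify(result) };
}

const message = toFunctionMessage('orderStatus', orderStatus({ orderCode: 'OC-42424242' }));
console.log(message.content);
```

With the location included in the result, the model can answer “Was my order shipped?” and “Where is my order?” from the same function call.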

5. Limit the size of the descriptions

When describing your functions and their parameters, there are a few things to keep in mind.

- First, you may encounter a context limit. This means that you have exceeded the maximum number of tokens and need to shorten your descriptions to fit within the limit (4097 tokens for gpt-3.5-turbo).

- Additionally, functions perform better when their descriptions are concise and straight to the point. A common mistake is to excessively expand descriptions in order to ensure that the model fully comprehends our instructions. However, this approach actually adds unnecessary background noise and hinders the model’s functionality.

- Furthermore, there is an economic argument: the function definitions are billed as input tokens. Therefore, keep in mind that the longer your descriptions, the higher the cost.
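To keep an eye on this, you can track a rough size estimate of your definitions. The sketch below uses the common rule of thumb of about four characters per token for English text. It is only an approximation, since the exact count depends on the tokenizer and on how the API serializes functions internally. `estimateTokens` and the budget value are hypothetical.

```javascript
// Rough heuristic: about 4 characters per token for English text.
// This is an approximation, not the tokenizer used by the API.
const CHARS_PER_TOKEN = 4;

function estimateTokens(functions) {
  return Math.ceil(JSON.stringify(functions).length / CHARS_PER_TOKEN);
}

const functions = [
  {
    name: 'returnOrder',
    description: 'Creates an order return',
    parameters: {
      type: 'object',
      properties: {
        orderCode: { type: 'string', description: 'the code of the order' },
      },
      required: ['orderCode'],
    },
  },
];

// Hypothetical budget: share of the context window reserved for definitions.
const FUNCTION_TOKEN_BUDGET = 500;

const estimate = estimateTokens(functions);
if (estimate > FUNCTION_TOKEN_BUDGET) {
  console.warn(`Function definitions are large: ~${estimate} tokens`);
} else {
  console.log(`Function definitions: ~${estimate} tokens`);
}
```

For exact numbers, compare against the usage figures returned by the API rather than relying on this estimate.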

Conclusion 💭

I hope these tips and suggestions will provide you with valuable guidance and support for your current and upcoming projects. I encourage you to read GPT best practices, which can help you improve your strategy for building a general conversational agent. These best practices go beyond just function parameters and will assist you in structuring the architecture of your virtual assistant.