Docker is so cool...until it's not

Ghali Elalaoui Elabdellaoui · 8 min read

Docker is a popular platform for developing, shipping, and running applications. It uses containers, which are isolated environments that let developers package an application and its dependencies into a single unit. Docker makes it easy to deploy and run applications on any machine that runs Docker, from a developer’s laptop to a production server.

The concepts of virtual machines and containers have a long history in computing. Virtual machines have been around since the 1960s and provide a way to run multiple operating systems on a single physical machine. Containers, on the other hand, are a more recent development that builds on the concept of virtualization.

Despite its many benefits, Docker also comes with limitations and security risks. One of the main limitations is that containers share the host operating system’s kernel, so a kernel vulnerability exploited from inside one container can compromise the host and every other container running on it. It can also be difficult to manage and monitor the resource usage of containers, which can lead to performance issues.

Despite these limitations, Docker remains a popular platform for building and deploying applications thanks to its ease of use, flexibility, and portability. The rest of this article looks at how containers differ from virtual machines, where they come from, and where Docker’s limits and security pitfalls lie.

VM vs Containers

What’s a VM?

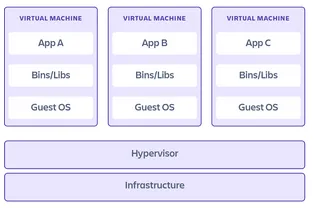

Virtual machines revolutionized the IT industry. A virtual machine is a virtual representation of a physical computer, and it helped solve many problems. For one, a single powerful server could run several virtual machines at once, and the memory and computing power given to each could be adjusted on the fly to match demand. Also, if a system crashed, the virtual machine’s environment could easily be exported and deployed on a new server, drastically reducing downtime. But soon enough, virtual machines reached their own limits. Running multiple full operating systems at once on the same hardware is very costly, and configuring each of them is as complex and time-consuming as setting up an operating system directly on the hardware. Furthermore, managing the shared resources is a complex and tedious task that must be done with great care and often manually.

What is a container?

Containers were created to overcome the limitations of virtual machines, and to understand how they do that, we first need to understand how a computer works.

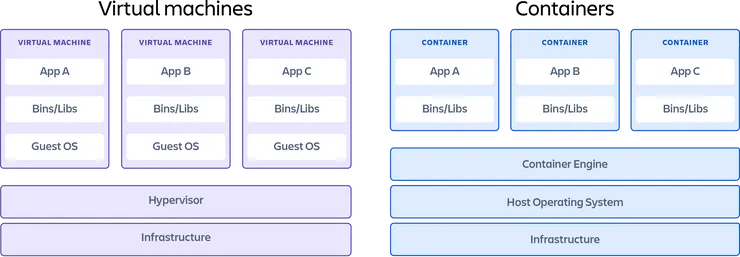

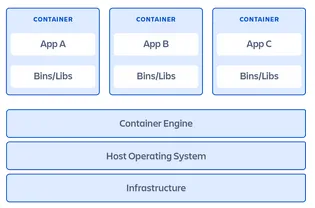

In contrast to a virtual machine, which runs a complete guest operating system on top of the host, a container is a software package that bundles an application with all the libraries and dependencies it needs to run. Containers run on a container engine, which is itself an ordinary program running on the host operating system and sharing its kernel. Skipping the hypervisor and guest OS layers makes containers much lighter, faster to start, and easier to scale.

Difference in architecture between virtual machines and containers

The concept of containers is by no means new. But until a few years ago, creating a container was a very complex task that required deep Linux knowledge.

Some history

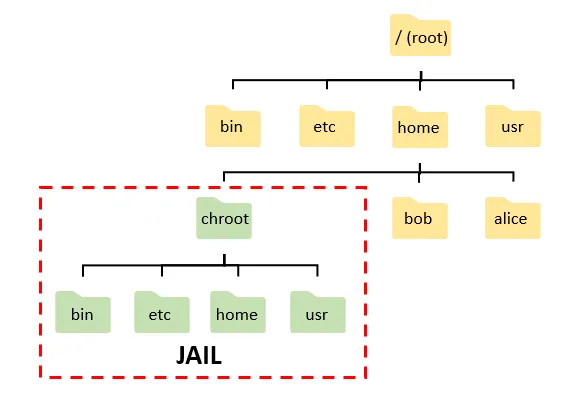

Chroot

Containers (or at least the technologies behind them) have been around for a long time. It started with the chroot command in 1979, which lets the user set a custom root directory for a process, providing one of the key features of containers: isolation. This idea was later extended with FreeBSD jails (2000) to add isolated network interfaces. These features gave birth to Linux-VServer and OpenVZ, operating-system-level virtualization systems that could create Linux environments with isolated directories and networks. However, setting up these containers was a complex process, and because the concept was unfamiliar at the time, they were wrongly marketed as lightweight VMs, frustrating users who expected complete virtual machines.

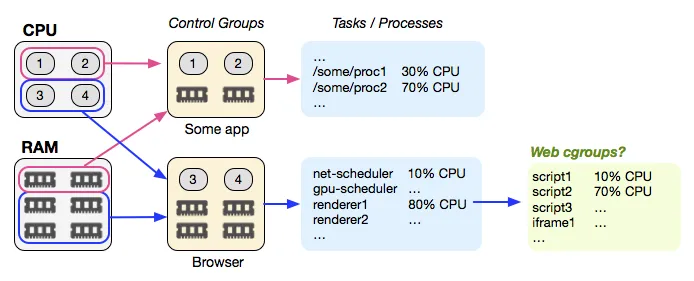

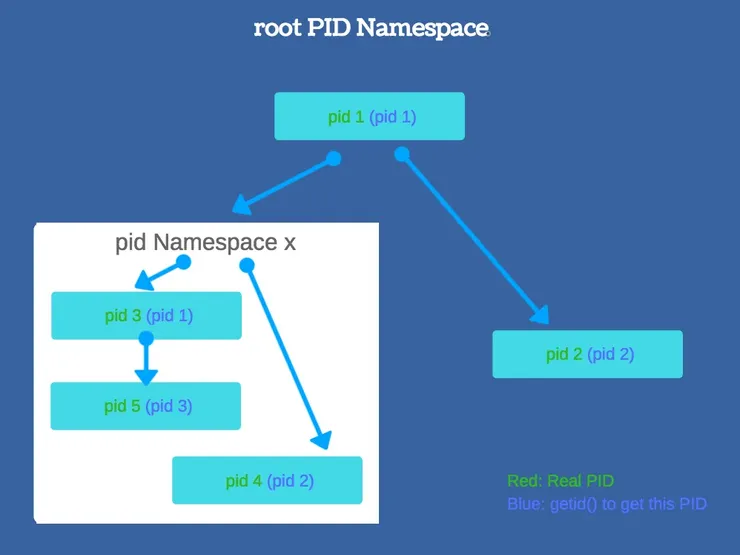

Cgroups and Linux namespaces

In 2008, a new key feature was added to Linux: cgroups (control groups). This kernel feature limits and accounts for the resources (RAM, CPU, disk I/O, network, etc.) used by groups of processes. Cgroups were then complemented by Linux namespaces, which partition kernel resources so that a process cannot see the resources of another namespace, further reinforcing the isolation aspect of containers.
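
To make the idea of limiting a group of processes concrete, here is a small Python sketch that computes the values Docker-style limits roughly translate to on a cgroup v2 host. It assumes the unified cgroup v2 hierarchy, where `cpu.max` holds a CPU quota and a period in microseconds and `memory.max` holds a byte count, and Docker's documented default period of 100000 microseconds for `--cpus`. `cgroup_v2_limits` and `parse_memory` are hypothetical helper names, and the sketch only computes the values; writing them into a real cgroup requires root.

```python
# Sketch: translate Docker-style --cpus / --memory limits into cgroup v2 values.
# Assumptions: cgroup v2 (unified hierarchy) and Docker's default CFS period of
# 100000 microseconds. Only computes values; writing them needs root.

CFS_PERIOD_US = 100_000  # scheduling period in microseconds used for --cpus

# Size suffixes, interpreted as binary multiples (1m = 1024 * 1024 bytes)
UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def parse_memory(value: str) -> int:
    """Convert a size such as '512m' or '1g' into a number of bytes."""
    value = value.strip().lower()
    if value and value[-1] in UNITS:
        return int(float(value[:-1]) * UNITS[value[-1]])
    return int(value)  # plain number: already in bytes


def cgroup_v2_limits(cpus: float, memory: str) -> dict:
    """Return the text to write into cpu.max and memory.max for a container's cgroup."""
    quota = round(cpus * CFS_PERIOD_US)  # CPU time allowed per period, in microseconds
    return {
        "cpu.max": f"{quota} {CFS_PERIOD_US}",  # format: "$QUOTA $PERIOD"
        "memory.max": str(parse_memory(memory)),  # hard memory limit in bytes
    }


if __name__ == "__main__":
    print(cgroup_v2_limits(0.5, "512m"))
```

For example, `cgroup_v2_limits(0.5, "512m")` gives `cpu.max = "50000 100000"` (half of one CPU per period) and `memory.max = "536870912"` bytes.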

Docker

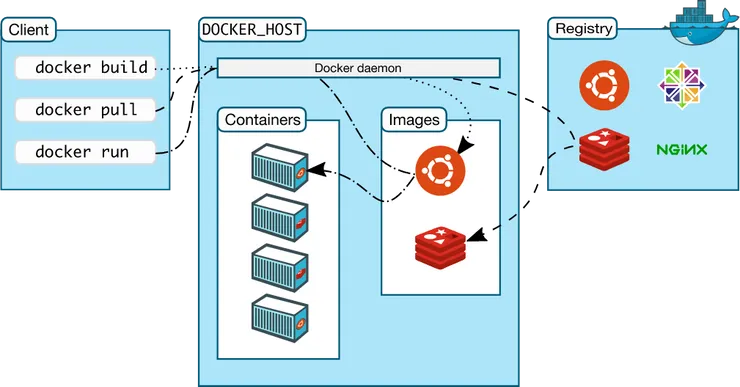

Then Docker was created, and with it the key concept that made containers what they are today: images. A Dockerfile is a text file that describes everything needed to build a given image; from it, anyone can build the image and get a running container with all the necessary dependencies in just a couple of commands. Images can then be pushed to Docker registries such as Docker Hub, which are cloud-hosted libraries where anyone can publish a custom image for easy sharing and deployment. Each image is paired with a tag, usually a version number, so that a specific version can be pulled and run.
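
An image reference therefore has a small structure: an optional registry host, a repository, and a tag that defaults to `latest`. The Python sketch below splits a reference into those parts. It is a simplified approximation of how Docker normalizes names (digests and name validation are left out), and `parse_image_ref` and `ImageRef` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

DEFAULT_REGISTRY = "docker.io"  # Docker Hub
DEFAULT_TAG = "latest"


@dataclass(frozen=True)
class ImageRef:
    registry: str
    repository: str
    tag: str


def parse_image_ref(ref: str) -> ImageRef:
    """Split 'registry/repository:tag' into its parts (simplified, no digests)."""
    registry, remainder = DEFAULT_REGISTRY, ref

    # A first path component containing '.' or ':' (or equal to 'localhost')
    # is treated as a registry host, e.g. 'localhost:5000/myapp'.
    first, sep, rest = ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest

    # The tag is whatever follows the last ':' in the final path component.
    colon = remainder.rfind(":")
    if colon > remainder.rfind("/"):
        repository, tag = remainder[:colon], remainder[colon + 1:]
    else:
        repository, tag = remainder, DEFAULT_TAG

    # Single-name images on Docker Hub live in the 'library' namespace.
    if registry == DEFAULT_REGISTRY and "/" not in repository:
        repository = "library/" + repository
    return ImageRef(registry, repository, tag)


if __name__ == "__main__":
    for name in ("postgres", "arm32v5/nginx:1.22", "localhost:5000/myapp:2.0"):
        print(name, "->", parse_image_ref(name))
```

With this sketch, `parse_image_ref("postgres")` yields registry `docker.io`, repository `library/postgres` and tag `latest`, which is why pulling a bare image name fetches the latest official image from Docker Hub.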

Docker also provides the Docker CLI, a command-line application that lets developers create and interact with their containers from the terminal. Each command is translated into a request to the Docker Engine, which runs in the background, through a REST API.

For instance, `docker pull postgres` pulls the image tagged `latest` for postgres.

`docker pull arm32v5/nginx:1.22` pulls version 1.22 of the nginx image built for the arm32v5 architecture.
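
Since the CLI is only a client, the same pull can be requested by talking to the Engine API directly. The sketch below assumes a Linux host where the daemon listens on the default `/var/run/docker.sock` Unix socket, and uses the Engine API’s `POST /images/create` endpoint with its `fromImage`, `tag` and optional `platform` query parameters. `UnixHTTPConnection`, `pull_request_path` and `pull` are hypothetical helpers; unlike the real CLI, the sketch handles no registry authentication, API version negotiation or detailed error reporting.

```python
import http.client
import json
import socket
from typing import Optional
from urllib.parse import urlencode

DOCKER_SOCKET = "/var/run/docker.sock"  # default socket of a rootful Linux daemon


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that sends its requests over a Unix domain socket."""

    def __init__(self, socket_path: str):
        super().__init__("localhost")  # host only fills the HTTP Host header
        self.socket_path = socket_path

    def connect(self) -> None:
        self.sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        self.sock.connect(self.socket_path)


def pull_request_path(image: str, tag: str = "latest", platform: Optional[str] = None) -> str:
    """Build the Engine API path for pulling image:tag (what `docker pull` asks for)."""
    params = {"fromImage": image, "tag": tag}
    if platform:
        params["platform"] = platform  # e.g. "linux/arm64"
    return "/images/create?" + urlencode(params)


def pull(image: str, tag: str = "latest") -> None:
    """Ask the local daemon to pull an image and print its progress messages."""
    conn = UnixHTTPConnection(DOCKER_SOCKET)
    try:
        conn.request("POST", pull_request_path(image, tag))
        response = conn.getresponse()
        # The daemon streams one JSON object per line while the pull progresses.
        for line in response:
            if line.strip():
                message = json.loads(line)
                print(message.get("status") or message.get("error", ""))
    finally:
        conn.close()


if __name__ == "__main__":
    print(pull_request_path("postgres"))
```

Here `pull_request_path("arm32v5/nginx", "1.22")` returns `/images/create?fromImage=arm32v5%2Fnginx&tag=1.22`. Actually calling `pull("postgres")` requires permission to use the daemon’s socket, which is exactly the kind of access discussed in the security part of this article.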

With Docker, the development environment can match the production environment exactly, which limits the “it works on my machine” problem and gives Docker one more key feature: portability.

Docker Limits

Cross-OS containers

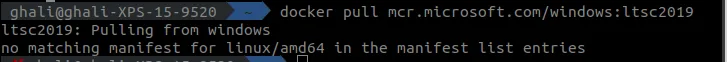

Yet Docker still has some limitations compared to virtual machines. The biggest one is that containers still run on the host OS’s kernel, so we can only run images that are compatible with that kernel. This is why we cannot directly run a Windows Server image on Docker for Linux.

As the screenshot above shows, trying to pull a Windows Docker image on Ubuntu fails with an error.

The same architecture explains why an image that works perfectly on one machine can fail on a MacBook with an Apple silicon processor (an M1, for example). The binaries inside a container are executed directly by the host’s CPU. An image built for x86 (a Complex Instruction Set Computer, or CISC, architecture used by Intel processors) contains machine instructions that an ARM processor (a Reduced Instruction Set Computer, or RISC, architecture used by Apple silicon) cannot execute, so the program crashes. Apple includes a translation layer called Rosetta to run x86 apps on Apple silicon, and it can be used to run x86 Docker images on the new Macs, but it is far from perfect, so it is preferable to use ARM images whenever possible.
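
Before pulling an image, you can compare the host’s CPU architecture with the image’s platform to know whether it will run natively or through emulation. The sketch below maps Python’s `platform.machine()` values to Docker platform strings such as `linux/amd64` and `linux/arm64`. The mapping table is a partial assumption, `host_platform` and `needs_emulation` are hypothetical helpers, and the check is deliberately strict (some ARM hosts can run related variants natively). When no native image exists, Docker’s `--platform` flag (for example `docker run --platform linux/amd64 ...`) requests a specific architecture explicitly.

```python
import platform
from typing import Optional

# Partial mapping from platform.machine() values to Docker platform strings.
# The OS part is "linux" because Docker Desktop on macOS and Windows runs
# Linux containers inside a Linux virtual machine.
MACHINE_TO_PLATFORM = {
    "x86_64": "linux/amd64",
    "amd64": "linux/amd64",    # Windows reports "AMD64"
    "aarch64": "linux/arm64",  # 64-bit ARM Linux
    "arm64": "linux/arm64",    # Apple silicon under macOS
    "armv7l": "linux/arm/v7",
}


def host_platform(machine: Optional[str] = None) -> str:
    """Return the Docker platform string matching this machine's CPU."""
    machine = (machine or platform.machine()).lower()
    return MACHINE_TO_PLATFORM.get(machine, "linux/" + machine)


def needs_emulation(image_platform: str, machine: Optional[str] = None) -> bool:
    """True if the image targets a different CPU architecture than the host (strict check)."""
    return image_platform != host_platform(machine)


if __name__ == "__main__":
    print("host platform:", host_platform())
    print("amd64 image needs emulation here:", needs_emulation("linux/amd64"))
```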

And for anyone wondering why Linux Docker images can run on Windows: Microsoft has spent the last few years making Windows more developer-friendly and provides WSL 2 (Windows Subsystem for Linux), a lightweight Linux virtual machine available on Windows 10 and 11. Docker Desktop can use WSL 2 as its backend, so Linux Docker images run inside WSL.

Security issues

Docker has a well-known security issue: when it is installed with the method recommended in the documentation, the Docker daemon runs as root, so any user allowed to send it commands can effectively act as root.

Most people ignore the red warning box on Docker’s post-installation steps page, which states that adding a user to the docker group grants that user root-level privileges. That is not a big deal on a personal machine, but on a server we should absolutely not set Docker up this way, otherwise we could be handing attackers root access to the server.
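
A quick way to check whether your own account is in this situation is to test its membership in the `docker` group and whether the daemon’s socket is writable. Below is a minimal Python sketch, assuming a Linux host and the default socket path `/var/run/docker.sock`; `in_docker_group` and `can_use_docker_socket` are hypothetical helper names. Group changes only apply to new login sessions, so a freshly added user may need to log out and back in before the group check returns `True`.

```python
import grp
import os

DOCKER_SOCKET = "/var/run/docker.sock"  # default daemon socket on Linux


def in_docker_group(group_name: str = "docker") -> bool:
    """True if the current process belongs to the given group (default: 'docker')."""
    try:
        gid = grp.getgrnam(group_name).gr_gid
    except KeyError:
        return False  # no such group on this machine
    # getgroups() lists the supplementary groups of this process's session.
    return gid == os.getgid() or gid in os.getgroups()


def can_use_docker_socket(path: str = DOCKER_SOCKET) -> bool:
    """True if this user can read and write the daemon's socket, i.e. send it commands."""
    return os.access(path, os.R_OK | os.W_OK)


if __name__ == "__main__":
    if in_docker_group() or can_use_docker_socket():
        print("This account can control the Docker daemon: treat it as root-equivalent.")
    else:
        print("This account cannot talk to the Docker daemon directly.")
```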


Privilege escalation using docker

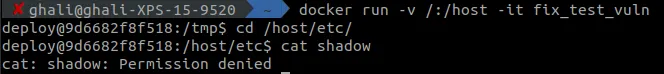

You can test this vulnerability for yourself.

You need a Linux distribution, either installed directly or running in a virtual machine (Ubuntu, Kali, Debian, etc.).

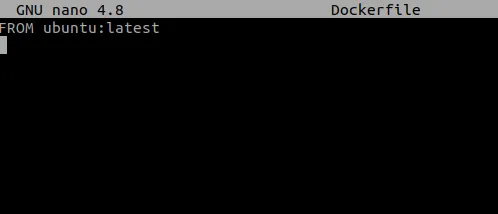

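Then install Docker following the official guide, apply the default Docker post-installation steps (which add your user to the docker group), and write a dummy Dockerfile. Any minimal image will do; for instance, a Dockerfile containing the single line `FROM ubuntu` is enough for this test.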

Then build the image with: `docker build -t my_vulnerable_image .`

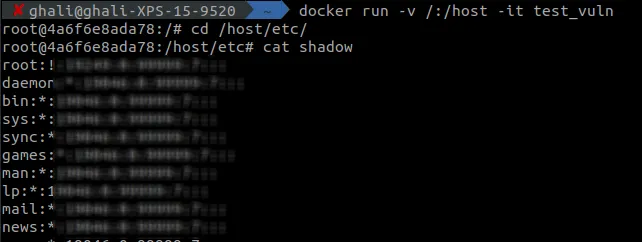

You can now start a container, bind-mounting the host’s root directory onto a directory of your choice inside the container (here /host): `docker run -v /:/host -it my_vulnerable_image`

You’ll then get a shell inside the container, running as root. Because the host’s filesystem is mounted at /host, you can read or modify any file on the host: for example, `cat /host/etc/shadow` displays every user’s password (hashed, yes, but still very dangerous).
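
The dangerous part of that command is the bind mount of the host’s root directory. A reviewer could flag such mounts in scripts before running them; the Python sketch below extracts the host side of `-v`/`--volume` arguments and reports the ones found in a short list of sensitive paths. The list, `bind_mount_sources` and `risky_mounts` are hypothetical, and the parser ignores the `--mount` syntax and doesn’t stop at the image name, so treat it as an illustration rather than a security tool.

```python
import os
import shlex

# Host paths whose bind mount effectively gives a container control of the host
# (an illustrative, non-exhaustive list).
SENSITIVE_HOST_PATHS = {"/", "/etc", "/root", "/var/run/docker.sock", "/run/docker.sock"}


def bind_mount_sources(args):
    """Yield the host side of every -v/--volume bind mount in a docker run argument list."""
    i = 0
    while i < len(args):
        arg, value = args[i], None
        if arg in ("-v", "--volume") and i + 1 < len(args):
            value = args[i + 1]
            i += 1  # skip the value we just consumed
        elif arg.startswith("--volume="):
            value = arg.split("=", 1)[1]
        # Bind mounts start with an absolute host path; named volumes do not.
        if value and value.startswith("/"):
            yield value.split(":", 1)[0]
        i += 1


def risky_mounts(command: str) -> list:
    """Return the sensitive host paths a docker run command line would bind-mount."""
    sources = bind_mount_sources(shlex.split(command))
    return [os.path.normpath(src) for src in sources
            if os.path.normpath(src) in SENSITIVE_HOST_PATHS]


if __name__ == "__main__":
    print(risky_mounts("docker run -v /:/host -it my_vulnerable_image"))
```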

Mitigation

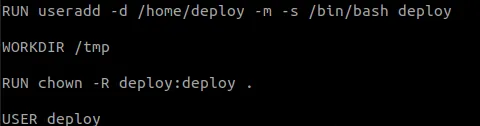

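To mitigate this, we can create a user without root privileges in the image and make it the default user. On a Debian- or Ubuntu-based image, for example, this takes two Dockerfile instructions: `RUN useradd --create-home deploy` to create the user, then `USER deploy` to switch to it.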

From then on, the commands in the container run as the deploy user instead of root, so `cat /host/etc/shadow` should now fail with a permission error.

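This works for any Docker image, but it only changes the image’s default user: anyone who can run docker commands can still override it with `docker run --user root` and mount the host filesystem as before. So if you are working on a server rather than a personal machine, where you can’t leave any room for doubt, you should follow Docker’s guide to rootless mode, which runs the Docker daemon itself as a non-root user.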
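
To check that an image actually drops root by default, you can look at the last `USER` instruction of the final build stage. The Python sketch below is a simplified, hypothetical linter (`effective_user`, `runs_as_root`): it assumes every base image runs as root, resets to root at each `FROM`, and ignores line continuations and `ARG`/`ENV` substitution. Passing it is not a substitute for securing the daemon, since `docker run --user root` overrides the image’s default user.

```python
def effective_user(dockerfile_text: str) -> str:
    """Return the user the final build stage runs as, based on USER instructions.

    Simplified: assumes every base image runs as root and ignores line
    continuations and ARG/ENV substitution.
    """
    user = "root"
    for raw_line in dockerfile_text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        parts = line.split(None, 1)
        instruction = parts[0].upper()
        argument = parts[1].strip() if len(parts) > 1 else ""
        if instruction == "FROM":
            user = "root"  # new build stage: assume its base image runs as root
        elif instruction == "USER" and argument:
            user = argument
    return user


def runs_as_root(dockerfile_text: str) -> bool:
    """True if the image would start its processes as root (by name or UID 0)."""
    name = effective_user(dockerfile_text).split(":", 1)[0]  # drop an optional ':group'
    return name in ("root", "0")


if __name__ == "__main__":
    dockerfile = "FROM ubuntu\nRUN useradd --create-home deploy\nUSER deploy\n"
    print(effective_user(dockerfile), runs_as_root(dockerfile))
```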


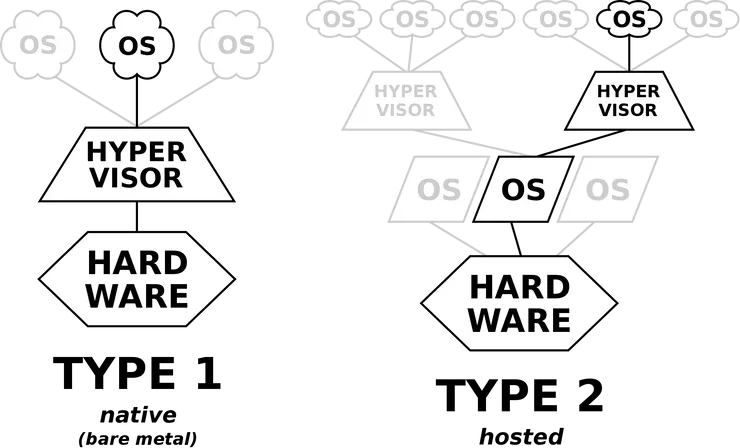

Note: WSL 2 is faster than a “standard” Linux virtual machine because it runs as a lightweight VM on Microsoft’s Hyper-V, which is a type-1 (bare-metal) hypervisor.
