Optimising screen load times for legacy low-end devices in React Native

Mo Khazali · 9 min read

You’ve developed your React Native application and you’re ready to release it to the public. All is going well, until you start testing on real phones. While your app is perfectly smooth and snappy on your shiny new iPhone 14 Pro, other phones tell a different story…

An Android user reports that it takes ages to navigate between each screen in the app!


Off go the alarm bells… 🚨


Having a slow app is not only frustrating for your users but also poses a real, material risk to your business:

A Google study found that Furniture World was able to increase their mobile conversion rate by 10% when they reduced their page load time by 20%.

Source: Think With Google

Context

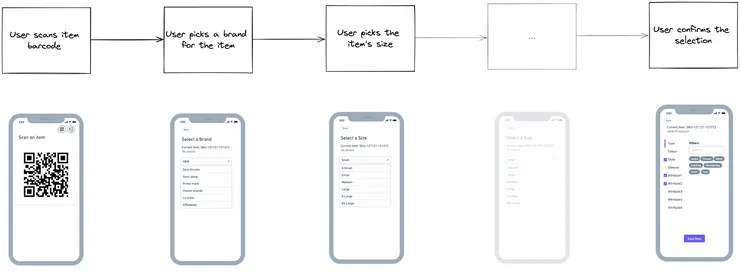

The findings of this article are largely derived from problems we faced when developing an app for a client in the fashion market. Android users were experiencing slowness in some of the app’s main flows. The flow we were looking to optimise was one where the user inputs data about a particular item of clothing, including product category, size, colour, etc. They’d start off by scanning the item’s barcode, and would then be led through a series of screens to input data for the item (usually by choosing from selectable lists). At the end of the flow, they’d be shown a review screen where they would confirm all the data they had entered and save the item.

The flow looked something like this:

Diagnostics

Our first step was to figure out why there was such a performance discrepancy between our iPhones and the Android phone of the user who reported the slowness. Our primary question was:

Is this an OS or hardware related problem?

Initially, we assessed the application on a number of flagship Android phones to see whether it was slow across all Android devices, which would point to an OS compatibility issue. The experiments showed that on 3 different flagships from 3 popular Android manufacturers, load times were comparable to those on our iPhones.

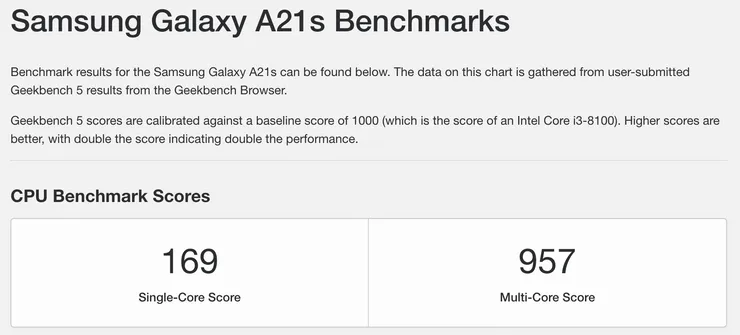

We turned our attention to the individual user who had reported the slow behaviour, and asked them for their Android version and phone model. We quickly realised that we were facing a hardware limitation. Despite being a 2020 Samsung model, the phone had truly awful CPU/GPU benchmark scores:

For context, the single-core scores here are about the same as the Galaxy S5’s… 🤯 That phone was released back in 2014, before React Native even existed. Furthermore, the multi-core score was lower than the single-core score of the iPhone XR, a phone from 2018. This entry-level Android market was something we hadn’t considered when testing our application.

We quickly realised that we’d need to optimise for this range of smartphones.

To start with, we measured the time between screens on this specific phone. Although it varied from screen to screen, it took an average of 6 seconds from a tap to the user landing on the next screen. This made the app practically unusable.

Goal

We wanted to ensure that the application was usable on phones with low computing resources. We adopted this phone as our benchmark device and set ourselves a goal of reducing the time between screens to under 1 second.
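To track progress against a target like this, it helps to record every tap-to-screen transition rather than eyeballing it. The sketch below is framework-agnostic TypeScript; the TransitionTimer class and its methods are hypothetical names, not part of React Native or React Navigation. In an app, you might call start() in the button’s onPress handler and end() once the destination screen has mounted or finished its transition (React Navigation’s stack navigators emit a transitionEnd event, for example).

```typescript
// Hypothetical helper: records tap-to-screen transition times and checks them
// against a budget. The clock is injectable so it can be tested with fake time.
// Date.now() is coarse but universally available; performance.now() gives
// finer resolution where the runtime supports it.
type Clock = () => number;

class TransitionTimer {
  private readonly now: Clock;
  private readonly pending = new Map<string, number>();
  private readonly samples: number[] = [];

  constructor(now: Clock = () => Date.now()) {
    this.now = now;
  }

  // Call when the user taps the element that triggers navigation.
  start(label: string): void {
    this.pending.set(label, this.now());
  }

  // Call once the destination screen is ready. Returns the elapsed time in ms,
  // or undefined if start() was never called for this label.
  end(label: string): number | undefined {
    const startedAt = this.pending.get(label);
    if (startedAt === undefined) return undefined;
    this.pending.delete(label);
    const elapsed = this.now() - startedAt;
    this.samples.push(elapsed);
    return elapsed;
  }

  // Mean of all recorded transitions in ms (0 if nothing has been recorded).
  averageMs(): number {
    if (this.samples.length === 0) return 0;
    return this.samples.reduce((sum, ms) => sum + ms, 0) / this.samples.length;
  }

  // True when at least one transition was recorded and the mean is under budget.
  withinBudget(budgetMs: number): boolean {
    return this.samples.length > 0 && this.averageMs() < budgetMs;
  }
}
```

After a run through the flow on the benchmark device, withinBudget(1000) answers the "under 1 second" question directly, and averageMs() gives the figure to compare between experiments.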

If you’re interested in learning more about choosing a benchmark device for measuring performance, we have an article talking about how we select and measure performance at Theodo Group.

Experiments

We ran the following experiments and recorded how much each one reduced the time between screen loads:

Batching Requests to the Backend

In some of our major flows, where users entered related data across multiple screens, we were sending information to the backend after every user input. For example, we had a stack of screens where the user was inputting data about an item of clothing. These screens were all connected to a context - whenever the user selected a value, an action would be dispatched to the context and handled by a reducer. Once the reducer had handled the action, the user would be navigated to the next screen.
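A reducer along these lines might look roughly like the sketch below. The field names mirror the data mentioned earlier (category, size, colour), but the state shape, the action names and itemFormReducer itself are hypothetical. In the app, a reducer like this would be passed to React’s useReducer inside the context provider, and each screen would dispatch a SET_FIELD action before navigating.

```typescript
// Hypothetical shape of the item being described across the flow.
interface ItemFormState {
  barcode?: string;
  category?: string;
  size?: string;
  colour?: string;
}

type ItemFormAction =
  | { type: "SET_FIELD"; field: keyof ItemFormState; value: string }
  | { type: "RESET" };

// Pure reducer: returns a new state object instead of mutating the old one,
// so React can detect the change by reference.
function itemFormReducer(state: ItemFormState, action: ItemFormAction): ItemFormState {
  switch (action.type) {
    case "SET_FIELD":
      return { ...state, [action.field]: action.value };
    case "RESET":
      return {};
  }
}
```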

Initially, this context used a useEffect to fire PATCH requests to the backend whenever the reducer’s state changed. Effectively, this meant that after every data input, a fetch request was sent to the backend before the user was navigated to the next screen.

We first checked to make sure that we weren’t awaiting these requests, as server latency could easily cause the app’s navigation to become choppy. To our surprise, we were not…

After further investigation, we found that even though we weren’t waiting for a response from the backend, firing the request still added some overhead, which made the screen transition slower.

To reduce this delay, we replaced the useEffect with a useCallback that would only fire a PATCH request when invoked. We called this method at the end of the stack when the user had gone through all of the screens and inputted all of the relevant fields.
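The same idea can be sketched without React, assuming a hypothetical PatchBatcher class and an injected send function standing in for the real fetch call: every selection is recorded in memory, and only flush() touches the network. In the app, flush() plays the role of the useCallback-wrapped function called at the end of the stack.

```typescript
// Stand-in for the network call. In the app this might wrap
// fetch(url, { method: "PATCH", body: JSON.stringify(changes) }).
type SendPatch = (changes: Record<string, string>) => Promise<void>;

// Hypothetical helper: buffers field changes in memory and sends one PATCH on flush().
class PatchBatcher {
  private pending: Record<string, string> = {};
  private readonly send: SendPatch;

  constructor(send: SendPatch) {
    this.send = send;
  }

  // Called on every selection: no request is fired here,
  // so nothing network-related happens during the screen transition.
  record(field: string, value: string): void {
    this.pending = { ...this.pending, [field]: value };
  }

  hasPending(): boolean {
    return Object.keys(this.pending).length > 0;
  }

  // Called once at the end of the flow (e.g. when the user saves on the review screen).
  async flush(): Promise<void> {
    if (!this.hasPending()) return;
    const changes = this.pending;
    this.pending = {};
    try {
      await this.send(changes);
    } catch (error) {
      // Put the changes back (newer edits win) so a retry can resend them.
      this.pending = { ...changes, ...this.pending };
      throw error;
    }
  }
}
```

Merging changes into one object means that if a user changes their mind about a size, only the final value is sent. Restoring the buffer on failure keeps the data around for a retry, although, as noted below, it still lives only in memory.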

A drawback to this approach is that if the user doesn’t finish the flow - say they get interrupted for a long period of time, or the app crashes - their half-entered data won’t be saved to the backend and they’ll need to repeat the process.

This reduced the time between screens by 1 second, down to 5 seconds.

Optimising React Navigation

Navigation in React Native has evolved over time to allow for better performance and smoother transitions. Traditionally, screen transitions and navigations were handled by the JS thread, which resulted in slow and choppy behaviour. React Navigation was largely created for this reason - there was a real need to make navigation seamless, and offload those responsibilities to the native layer.

We found that having too many stacks (and screens on the stack navigator) at the same time (unsurprisingly) increases the amount of memory the app uses.

Here are a few quick wins for optimising with React Navigation:

- Try to call navigation.reset() explicitly when you know that you don’t need any of the previous screens anymore.

- Having a large number of nested stack navigators will increase load on your RAM. Try to combine feature navigators where they make sense, and minimise the nesting of navigators. Read more about it here.

- Use the NativeStackNavigator as opposed to the default JS-based StackNavigator (if you don’t need the additional customisation).

- Check if you’re using the latest major version of React Navigation.

  - Over the years, the library has been overhauled and optimised to improve performance.

  - For example: before v2.1, the library used regular Views under the hood, which didn’t come with the same performance optimisations as native screens (UIViewController for iOS and FragmentActivity for Android, respectively). After v2.1, it was recommended that you use react-native-screens to benefit from the native screen optimisations, although you had to manually call useScreens() to activate it. Nowadays, this is the default behaviour with React Navigation.

  - The Opendoor team gained massive performance improvements by upgrading React Navigation from v1 → v5. You can read about it in their article here.
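As an illustration of the first tip: navigation.reset() takes a navigation state object with an index and a routes array, and replaces the current stack with it, so the dropped screens can unmount. The buildResetState helper below is a hypothetical convenience for building that object; the route names are made up, and the local types are simplified stand-ins for React Navigation’s own, richer types.

```typescript
// Simplified local types mirroring the shape navigation.reset() accepts.
interface RouteEntry {
  name: string;
  params?: Record<string, unknown>;
}

interface ResetState {
  index: number;
  routes: RouteEntry[];
}

// Hypothetical helper: keeps only the given routes on the stack and focuses
// the last one. Every screen not listed here is removed from the stack.
function buildResetState(...routes: RouteEntry[]): ResetState {
  if (routes.length === 0) {
    throw new Error("reset needs at least one route");
  }
  return { index: routes.length - 1, routes };
}

// Usage inside a screen once the item has been saved (hypothetical route names):
// navigation.reset(buildResetState({ name: "Home" }, { name: "ItemSaved", params: { id } }));
```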

Overall, minor React Navigation optimisations reduced the time between screens by around 500ms, down to an average of 4.5 seconds.

This was barely noticeable.

Unmounting computationally expensive elements after navigating away

During our investigation, we observed that after every additional screen in a stack, the load time would marginally increase. We suspected that parts of these screens’ states were being kept in memory, despite the screens not being focused.

We looked at these screens and found that we were rendering FlatLists that had dozens of rows of data within each of them. If these were being left mounted after the screen was no longer in focus, they would quickly start filling up our device’s memory.

To test whether this was the issue, we used a hook from the React Navigation library called useFocusEffect, which is triggered when a screen:

- Comes into focus

- Becomes unfocused (on blur)

We added a useFocusEffect to a computationally expensive component (our SearchableFlatList) and used it to set a boolean useState variable depending on whether the screen was focused. We only rendered the component while that boolean was true. Effectively, this would unmount our component when the screen went out of focus.

The resulting code was something like this:

You can implement this on a component-by-component basis, which lets you choose which computationally expensive components to unmount when the screen goes out of focus.
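The focus-gating logic can be modelled in plain TypeScript so it runs outside React. FocusLifecycle and gateExpensiveList are hypothetical names: FocusLifecycle mimics the contract of useFocusEffect (the effect runs when the screen gains focus, and the cleanup it returns runs on blur), and the returned function plays the role of the boolean state variable described above. The comment at the top shows roughly how the same pattern reads in a component.

```typescript
// Roughly, in a React Native component:
//   const [isFocused, setIsFocused] = useState(false);
//   useFocusEffect(useCallback(() => {
//     setIsFocused(true);
//     return () => setIsFocused(false);
//   }, []));
//   return isFocused ? <SearchableFlatList {...props} /> : null;
// The code below models that contract so the logic can run anywhere.

type Cleanup = () => void;
type FocusEffect = () => Cleanup | void;

// Hypothetical stand-in for a screen's focus lifecycle.
class FocusLifecycle {
  private effects: FocusEffect[] = [];
  private cleanups: Cleanup[] = [];

  addFocusEffect(effect: FocusEffect): void {
    this.effects.push(effect);
  }

  // Screen comes into focus: run every effect and keep its cleanup.
  focus(): void {
    for (const effect of this.effects) {
      const cleanup = effect();
      if (typeof cleanup === "function") this.cleanups.push(cleanup);
    }
  }

  // Screen loses focus: run (and forget) the cleanups from the last focus.
  blur(): void {
    for (const cleanup of this.cleanups.splice(0)) cleanup();
  }
}

// Returns a "should the expensive list be mounted?" check for a screen.
function gateExpensiveList(screen: FocusLifecycle): () => boolean {
  let isFocused = false;
  screen.addFocusEffect(() => {
    isFocused = true; // setIsFocused(true) in the real component
    return () => {
      isFocused = false; // setIsFocused(false) on blur, so the list unmounts
    };
  });
  return () => isFocused;
}
```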

The code above is the most basic version of getting this to work. In effect, this code will have a split second where the screen unmounts the component and then navigates away. This isn’t the prettiest thing to show your users, but fear not… You can use the InteractionManager to delay the effect until after the transition completes. You can find examples of this in the React Navigation docs.
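The idea behind that fix can also be modelled without React Native: work that would cause visible churn is queued while a transition is running, executed once it finishes, and cancelled if the screen regains focus first. InteractionQueue below is a hypothetical, simplified stand-in for InteractionManager. The real runAfterInteractions also returns a handle with cancel(), but it schedules work asynchronously, whereas this sketch runs a task immediately when nothing is in progress.

```typescript
interface Cancellable {
  cancel(): void;
}

interface QueuedTask {
  run: () => void;
  cancelled: boolean;
}

// Hypothetical stand-in for InteractionManager.
class InteractionQueue {
  private activeInteractions = 0;
  private queue: QueuedTask[] = [];

  // Called when a transition or animation starts.
  beginInteraction(): void {
    this.activeInteractions += 1;
  }

  // Called when it finishes; once nothing is running, queued tasks execute.
  endInteraction(): void {
    this.activeInteractions = Math.max(0, this.activeInteractions - 1);
    if (this.activeInteractions === 0) {
      for (const task of this.queue.splice(0)) {
        if (!task.cancelled) task.run();
      }
    }
  }

  // Defers work until interactions finish (simplification: runs now if idle).
  runAfterInteractions(run: () => void): Cancellable {
    const task: QueuedTask = { run, cancelled: false };
    if (this.activeInteractions === 0) {
      run();
    } else {
      this.queue.push(task);
    }
    return {
      cancel: () => {
        task.cancelled = true;
      },
    };
  }
}
```

For instance, the blur cleanup could schedule the "unmount the list" state update this way, so the list stays on screen until the navigation animation has finished.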

A common culprit for this issue is an inefficient interaction between your stack of screens and your app’s state management. In our case, a multi-page form was hooked up to a React Context. Because the components were kept mounted in the background, every update to the context could trigger a re-render of all of the lists in the background.

It helps to be aware of the necessary render optimisations, and to make conscious choices when modelling your app’s global state management. One improvement is to use a library like use-context-selector instead of the default React context for your app state. Libraries like this let a component subscribe to just the part of the context it uses, which reduces the number of re-renders it does.
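To see why selectors help, the sketch below models a context-like store in plain TypeScript. FormStore, subscribeWhole and subscribeWithSelector are hypothetical names, and this is not how use-context-selector is implemented internally. The first subscription style mirrors a plain React context, where every consumer is notified on every update; the second mirrors the idea behind the library’s useContextSelector(context, selector), where a consumer only re-renders when its selected value changes.

```typescript
type Listener = () => void;

// Minimal store holding the whole form state, like a context value.
class FormStore<S> {
  private state: S;
  private listeners = new Set<Listener>();

  constructor(initial: S) {
    this.state = initial;
  }

  getState(): S {
    return this.state;
  }

  setState(next: S): void {
    this.state = next;
    this.listeners.forEach((listener) => listener());
  }

  subscribe(listener: Listener): () => void {
    this.listeners.add(listener);
    return () => this.listeners.delete(listener);
  }
}

// Plain-context behaviour: the consumer "re-renders" on every update.
function subscribeWhole<S>(store: FormStore<S>, onRender: () => void): () => void {
  return store.subscribe(onRender);
}

// Selector behaviour: re-render only when the selected slice changes
// (compared with Object.is, so selectors should return stable values).
function subscribeWithSelector<S, T>(
  store: FormStore<S>,
  selector: (state: S) => T,
  onRender: () => void,
): () => void {
  let selected = selector(store.getState());
  return store.subscribe(() => {
    const next = selector(store.getState());
    if (!Object.is(next, selected)) {
      selected = next;
      onRender();
    }
  });
}
```

With two colour updates and one size update, a plain subscriber re-renders three times, while a selector-based list that only reads the category does not re-render at all.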

This change was the one that really made a big difference! Our loading time was reduced by a staggering 4 seconds, and ultimately brought our total navigation time down to an average of 0.5 seconds!


Wrap-up and Learnings

In the end, our performance crisis was averted. Our application was re-tested by our users with entry-level Android phones, and they reported that it was usable and quick (or I guess as quick as their phones got). 🎉

We had two main learnings from this experience:

- Early user testing is incredibly important and saves us from the headache of these problems surfacing close to release. Define a baseline phone that you want to support, and make sure that you’re consistently testing on these devices as you build your app.

- Make sure your components aren’t being left to re-render under the hood when you navigate away. This all ties back to what you’re mounting/unmounting when a screen gains or loses focus, but deliberate architectural choices, such as using context selectors, can also help in optimising your load times.