Keep your DevX Problems at Bay: the Kaizen

Alexis Reymann · 10 min read

Kai: change, Zen: good

This is the story of Bill. Bill is a full-stack developer at DevYourSite Inc.

Bill’s developer life is much like yours: he is given a feature to implement, thinks about how he’d design it, then implements it, tests it and pushes it to production.

Bill’s project is fairly standard: mostly TypeScript for the frontend and Java for the backend, plus Kubernetes to manage both the production infrastructure and CI/CD.

Just a normal developer life.

Well, that’s obviously just the theory. We all know how many obstacles stand in the way of delivering the features our clients want.

For example, Bill has been having CI problems lately. A fair number of jobs in his pipelines fail for no apparent reason. But the flakiness vanishes after a couple of restarts, so maybe there isn’t much to be done about it.

One day, though, a new senior developer joins the team: Sam. Upon seeing the state of the project’s CI, Sam tells the tale of a frog placed in a pot of tepid water. The pot sits on a hot stove that gradually heats the water until it boils. The frog does not mind the small, regular changes in temperature until it is cooked to death. Dropped into water that is already boiling, the same frog would jump out immediately and save its own life.

Bill is a developer. He understands the link between this story and their own situation: he and his team have grown so accustomed to the slowly decaying state of their CI that they have not noticed it become unbearable. Some colleagues have left the team recently, and this might actually be part of the reason.

Sam has seen this before in previous jobs, and she knows one thing for sure: solving this problem is not going to be easy.

Good will

Bill means well. He is concerned about this CI problem and plans to solve it so that everyone can benefit from his work as soon as possible.

Bill starts digging into the CI logs to understand what exactly is going wrong. He picks a failure at random whose log says “Your local changes to the following files would be overwritten by checkout”, followed by a list of files. Bill then goes through the following steps:

- Try to understand what this error message means

- Look it up on Stack Overflow

- Fix how git branches are merged in the CI pods by experimenting with git CLI flags

- Once this specific case works, merge a PR

Looks good! One problem down already. Or so Bill thinks…

The next day, other CI pipelines start failing very early in the process. This frustrates the dev team, which needs to hotfix the production branch, and the investigation traces the bug back to yesterday’s commit.

Sam enters the room and starts talking with Bill. Together they trace what went wrong from the observed consequences all the way back to the root cause. In this case, they actually identify several causes: Bill had an intuition that was neither confirmed by actual facts nor challenged by anyone else before being merged. Bill also finds it hard to explain what the fix actually changes compared to the previous version, meaning he did not learn much while solving the problem.

All this cost time and caused frustration for several devs: exactly what a CI is supposed to prevent! Sam now wants to propose a new framework for working on these CI issues as a team.

The method

As a very methodical and experienced developer, Sam gives herself a bit of time to set up the method. This tall order requires both time and people. The negotiation with management is tough, as new features still need to ship, so she has to bring out the big guns. When she is told that time spent on tech stuff is time not spent producing what the factory produces, she interrupts with the following assessment: “Do you ever feel like the devs are slow? Our recruitment process regularly brings in senior developers, so why are we slow? Why do more than half of our production deployments get delayed because some critical feature isn’t completely finished? We know at least part of where this comes from, and I want to work on exactly that.” After some more discussion, she ends up getting some leeway and time to do this.

First, we need to decide who should be on the problem-solving team: two or three devs for sure, since they are directly affected, but also someone who knows the ins and outs of the CI/CD infrastructure. So a SysOps is needed as well.

The idea behind Sam’s process is to split the work sensibly while letting everyone propose ideas and approaches to solve problems. Teamwork needs meetings, so there will be some, but not too many: half an hour a week with everybody is probably enough. Each team member must also have some time of their own to work on these issues. We will solve these problems incrementally, with an emphasis on the quality of the proposed solutions. No need to hurry.

Once all this is set, Sam brings everyone together for the first meeting.

“Here’s a shared page where the devs can start writing down the reasons behind unwanted CI failures, of any kind. If possible, we will tag each ticket so that devs can focus on, say, flaky tests, while SysOps can focus on infrastructure issues.

The important criteria here are:

- Visibility.

  - We want to know precisely what the issue is: the error message, the job it happens on… Give a screenshot and a link to a pipeline that yielded this error. Objective facts let us build a consensus on which problem we want to fix.

  - We want to know when a specific issue has been 100% dealt with. This also helps justify to management the time spent not producing new features.

- Measurement. We want precise statistics on the state of the CI, to compare against its state once some issues are solved.”
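Comparing periods is easier with a rate than with raw failure counts, since the number of jobs varies from week to week. A minimal sketch, assuming each job in a period can be labeled as an unwanted failure or not; the `PeriodStats` type, its fields and the numbers in the example are hypothetical:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeriodStats:
    """CI activity over one period (e.g. a week); field names are illustrative."""

    total_jobs: int
    unwanted_failures: int  # failures not caused by a genuine bug in the code under test

    @property
    def failure_rate(self) -> float:
        """Share of jobs that failed for an unwanted reason (0.0 when no job ran)."""
        return self.unwanted_failures / self.total_jobs if self.total_jobs else 0.0


def relative_reduction(before: PeriodStats, after: PeriodStats) -> float:
    """Fraction by which the unwanted-failure rate dropped: 0.4 means 40% fewer failures per job."""
    if before.failure_rate == 0:
        return 0.0  # nothing to reduce
    return 1 - after.failure_rate / before.failure_rate


if __name__ == "__main__":
    # Illustrative numbers only.
    before = PeriodStats(total_jobs=1000, unwanted_failures=100)
    after = PeriodStats(total_jobs=500, unwanted_failures=30)
    print(f"{relative_reduction(before, after):.0%}")
```

With these made-up numbers, raw failures drop by 70% (100 to 30) but the failure rate only goes from 10% to 6%, so the script reports a 40% reduction: looking at rates keeps a quiet week from passing for an improvement.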

The SysOps offers to write a script that parses the logs of failed CI pipelines to see which error messages appear most often. “Great idea!” answers Sam. “We have to be careful not to spend too much time perfecting this script, but it will help us tackle the problems with the most impact first.”
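The log-scanning script can start very small. The sketch below is a hypothetical illustration, not the team’s actual script: it assumes the logs of failed jobs have already been exported as `*.log` files into one directory, and its two signatures are simply the error messages quoted in this article. Each log is attributed to the first signature it matches, so the counts rank failure causes rather than raw error lines.

```python
"""Hypothetical sketch: rank failure causes across logs of failed CI jobs."""
import re
import sys
from collections import Counter
from pathlib import Path

# Known failure signatures. These regexes are illustrative assumptions built
# from error messages quoted in the article; extend them as issues get documented.
SIGNATURES = {
    "git checkout conflict": re.compile(
        r"Your local changes to the following files would be overwritten by checkout"
    ),
    "NVD meta file download": re.compile(r"Unable to download meta file"),
}


def classify(log_text: str) -> str:
    """Return the label of the first known signature found in a log, else 'unknown'."""
    for label, pattern in SIGNATURES.items():
        if pattern.search(log_text):
            return label
    return "unknown"


def rank_failures(log_texts):
    """Count one cause per log; return (label, count) pairs, most frequent first."""
    return Counter(classify(text) for text in log_texts).most_common()


def read_logs(directory: str):
    """Yield the text of every *.log file in a directory (assumed export location)."""
    for path in sorted(Path(directory).glob("*.log")):
        yield path.read_text(errors="replace")


if __name__ == "__main__":
    log_dir = sys.argv[1] if len(sys.argv) > 1 else "."
    for label, count in rank_failures(read_logs(log_dir)):
        print(f"{count:5d}  {label}")
```

Anything reported as `unknown` is a candidate for a new signature once someone has investigated it. Keeping the signature list short and explicit also stops the script from becoming a project of its own, which is exactly Sam’s concern.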

After some Q&A among all participants, it is time to end the meeting and start filling the document with the issues we come across.

The cycle

One week later. As planned, the shared document now lists a bunch of issues. The SysOps also has some insight into which issues account for the most failures. Not all issues are categorized yet, but we’ll work with what we have for now.

Sam asks Bill to explain one of his issues to the team. “Well, the pod where the job runs simply disappears at some point, so the job stops abruptly and fails.”

“We can’t be sure until we’ve investigated, but this looks like an Ops issue,” says Sam. The SysOps agrees to look into this issue first and spend some time on it in the coming days.

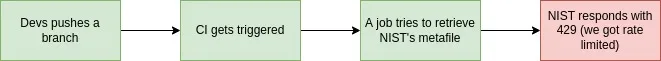

Sam has also found an interesting issue. Some CI jobs end with “Unable to download meta file: https://nvd.nist.gov/feeds/json/cve/1.1/nvdcve-1.1-modified.meta”.

No one knows what this URL is, except for the SysOps, who explains: “This file belongs to the NIST National Vulnerability Database feed of Common Vulnerabilities and Exposures (CVEs), i.e. known vulnerabilities and the package versions they affect. The CI seems to download this meta file to check whether the CVE data has changed, but since we launch a lot of jobs each day, we might have been rate-limited. I’ll investigate this week.” After agreeing on what to work on before next week, the team ends the meeting.

One week later.

In the CI crash stats from the last couple of days, 90% fewer pods have disappeared, and the NIST rate-limiting error has vanished completely. “I’ve increased the capacity of our CI infrastructure, but some crashes remain, and these will be harder to tackle. As for the NIST error, we’ve simply added a cache for it, which we refresh only once a day,” says the SysOps. “It’s only been a few days since these fixes, but they seem to have reduced crashes by around 40%. Pretty good!”
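The SysOps does not say how the NIST cache is built. One possible sketch, assuming the CI jobs can share a persistent directory, is a fetch-through cache that contacts the server at most once every 24 hours and serves the stale copy if a download fails. The function names and cache path are hypothetical:

```python
"""Hypothetical sketch: serve a remote file from a local cache refreshed at most once a day."""
import time
import urllib.request
from pathlib import Path
from typing import Callable

ONE_DAY_SECONDS = 24 * 60 * 60


def download(url: str) -> bytes:
    """Fetch a URL over HTTP(S) and return the raw body."""
    with urllib.request.urlopen(url, timeout=30) as response:
        return response.read()


def cached_fetch(
    url: str,
    cache_file: Path,
    max_age: float = ONE_DAY_SECONDS,
    fetch: Callable[[str], bytes] = download,
) -> bytes:
    """Return the cached copy of `url`, re-downloading only when it is older than `max_age` seconds."""
    if cache_file.exists() and time.time() - cache_file.stat().st_mtime < max_age:
        return cache_file.read_bytes()  # fresh enough: no request to the remote server
    try:
        data = fetch(url)
    except OSError:  # urllib.error.URLError is a subclass of OSError
        if cache_file.exists():
            return cache_file.read_bytes()  # serve the stale copy rather than failing the job
        raise
    cache_file.parent.mkdir(parents=True, exist_ok=True)
    tmp = cache_file.with_name(cache_file.name + ".tmp")
    tmp.write_bytes(data)
    tmp.replace(cache_file)  # atomic swap, also resets the mtime used as the cache age
    return data
```

A call such as `cached_fetch("https://nvd.nist.gov/feeds/json/cve/1.1/nvdcve-1.1-modified.meta", Path("/ci-cache/nvd/modified.meta"))` would then reach NIST at most about once a day, however many jobs run. Several jobs may still refresh at the same time right after expiry; refreshing from a single scheduled job instead avoids that.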

As these look like clear wins for the team, Sam feels the need to add something: “In a Kaizen, we look for low-cost (or even zero-cost) actions to reduce waste. Here you increased the capacity of the infrastructure, which might be the right move, but we have to make sure there is no other underlying cause for these pod crashes than high usage at peak hours.” The SysOps nods and confirms this is the case.

The team then presents some newly documented issues and starts the one-week cycle again. Some issues take more than a week to investigate because the team keeps its focus on quality: they want to understand the precise meaning of an error before fixing it. Hence, the “look it up on Stack Overflow and trial-and-error with CLI flags” method is not accepted.

A few months later, very few unwanted crashes remain. The team has solved problems ranging from infrastructure to package-lock conflicts and memory issues. Everyone has learnt a lot about the tools they use every day, such as Kubernetes, npm or even Prettier. The effort has also produced new practices for the development team, such as simpler pre-commit hooks and a better, less error-prone hotfix process.

What’s next? Well, it seems our Docker containers have trouble starting up the first time we launch them on our PCs. A good opportunity to apply the same method :)

Kaizen and its teachings

Most resources about Kaizen list 10 core principles. I would sum them up like this:

- Take one small step at a time

- Be creative and test your assumptions

- Ask yourself ‘why’ over and over until you’ve found the root cause of your problem

- If you can do something, do it now

- Perfectionism is not needed

While some of these principles may seem obvious, the hard part is sticking to them throughout the entire Kaizen process. At some point you may be tempted to solve multiple issues at once; one reason this is bad practice is that you will not learn as much as you could. Taking one step at a time lets everyone on the team become deeply aware of the problems being solved and their implications.

Because yes, Kaizen, like most other concepts taken from the Toyota Production System (TPS), is about learning. Continuous improvement is about learning, and neither can exist without the other.